GPT-3 (Generative Pre-trained Transformer 3) has been around for a while now. You may have heard of it, or even seen it in action without realizing that you were looking at yet another implementation of this particular model. At its core, GPT-3 is about generating text.

But it’s not just any random text generator: its output draws on intensive training over a large amount of pre-existing text from the internet. By combining that data with cutting-edge machine learning algorithms, the research team behind GPT-3 has produced something amazing.

It’s not an exaggeration to say that they may have started a whole new era in procedural generation.

An Overview of Machine Learning

Machine learning is a statistical approach to training computer algorithms to perform specific tasks. The basic idea revolves around a model that is trained on existing data and is then applied to similar data it encounters in the future.

A common example is a model for detecting specific objects in images—like cats. The algorithm would train on a huge number of images, being told which ones contain cats and which ones don’t. The ultimate goal is that it will be able to give a correct answer when presented with any picture, including ones that were not present in its original training data.
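The train-then-predict idea can be sketched in a few lines of Python. This is only a toy under loud assumptions: real image classifiers learn from raw pixels with neural networks, while this sketch uses two hand-made, hypothetical features per picture (ear pointiness and whisker density) and a simple nearest-centroid rule. The function names `train` and `predict` are illustrative and not taken from any library.

```python
from statistics import mean

def train(examples):
    """Average the feature vectors of each label into one centroid per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

# Hypothetical, hand-made features: (ear pointiness, whisker density), both 0..1.
training_data = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.7, 0.7), "cat"),
    ((0.2, 0.1), "not cat"),
    ((0.1, 0.3), "not cat"),
    ((0.3, 0.2), "not cat"),
]

model = train(training_data)

# Neither of these two pictures appears in the training data.
unseen_cat = predict(model, (0.85, 0.75))
unseen_other = predict(model, (0.15, 0.25))
print(unseen_cat, "/", unseen_other)
```

The point of the sketch is the last three lines: the model is asked about inputs it never saw during training, which is exactly the goal described above.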

Machine learning enjoys heavy usage in fields where manually developing algorithms to handle certain tasks is infeasible. And while it’s not the “magic wand” that some seem to see it as (in the sense that it’s not suitable for solving all types of problems), it’s still a very powerful tool when used the right way.

What Does GPT-3 Do?

GPT-3 is an advanced model specifically developed to generate text that imitates something a human might write. While there has been extensive research in the field of text generation for years, GPT-3 was a huge leap forward in terms of quality and performance.

The model was extensively trained on content gathered from the internet, and as a result, can give the impression of having actual “intellect”, in the sense that it’s aware of existing logical connections between topics and keywords. However, that’s just an illusion—at the end of the day, the text it generates is still based on articles and posts the algorithm has gone through during its training.
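To make the idea of generating text from patterns in training text more concrete, here is a deliberately tiny sketch. It is not how GPT-3 works: GPT-3 is a very large transformer neural network, while this example swaps in a far simpler technique, a word-level Markov chain that only remembers which word followed which. The corpus, the function names `build_model` and `generate`, and the fixed random seed are all assumptions made for illustration.

```python
import random
from collections import defaultdict

def build_model(text):
    """Map each word to the list of words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, prompt_word, length, seed=0):
    """Extend the prompt one word at a time by sampling a previously seen follower."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = [prompt_word]
    while len(output) < length:
        options = followers.get(output[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        output.append(rng.choice(options))
    return " ".join(output)

# Tiny, made-up training text standing in for "content gathered from the internet".
corpus = (
    "the model reads text and the model writes text "
    "and the reader reads what the model writes"
)

model = build_model(corpus)
sample = generate(model, "the", 8)
print(sample)
```

Every pair of neighbouring words in the output already occurred somewhere in the training text, which is a crude version of the point made above: the generated text can look fluent, yet it is still assembled from what the model saw during training.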

That’s not to diminish how impressive it is, though. With just a small prompt (one or two sentences are often enough), GPT-3 can produce entire articles that read and flow very well, and more importantly, provide new information to the reader. People have been experimenting with prompts of all kinds, including more philosophical ones, and the results are often interesting.

The quality of GPT-3’s output is so good that there have been multiple cases where readers were convinced they were reading something written by a human. In one case, The Guardian even published an op-ed generated by GPT-3 (assembled by the paper’s editors from several of its outputs), arguing that humans should not feel threatened by it.

The Various Applications of the New Technology

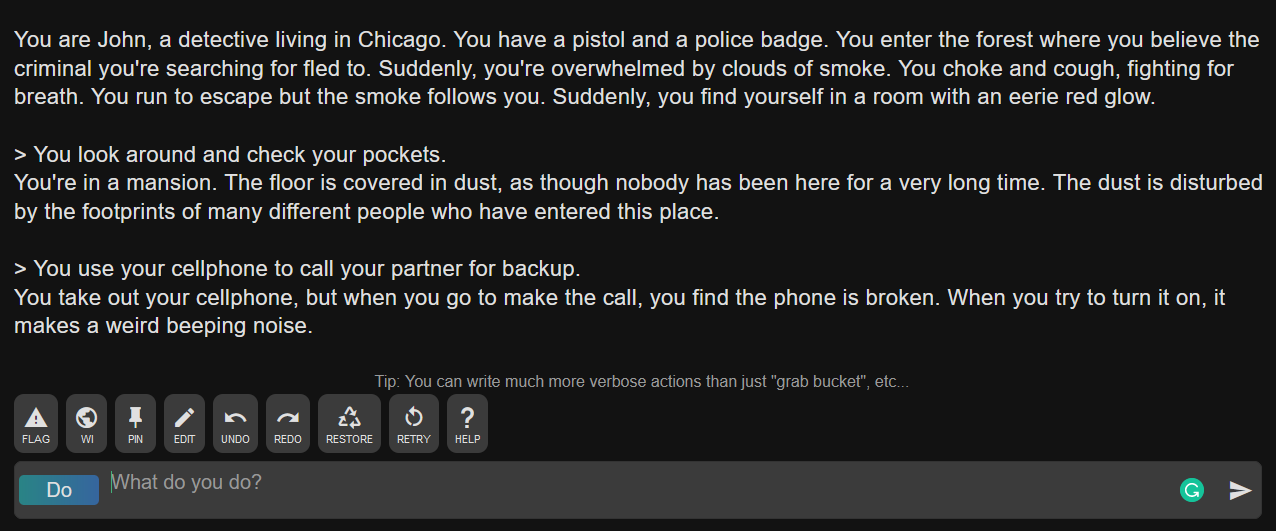

GPT-3 quickly found uses in many circles, and they go beyond producing text for articles. Some have created advanced, lifelike chatbots based on it. AI Dungeon is a game that uses GPT-3 to generate endless stories that respond to free-form input from the player.

Imagine a choose-your-own-adventure book where you can type in anything you can think of, and the book will continue the story based on that.

People have even started experimenting with applications that go beyond text. One example is hooking up a GPT-3 generator to a 3D modeling application and feeding it prompts like “a room with a bed and a sofa”. Some have attempted to generate program code with it, though results in this area have been far less impressive.

Ethical and Moral Implications

However, GPT-3 has many questionable implications that need to be considered. Fake news is not the core problem here, and it’s not even a very serious issue when it comes to abuse of GPT-3.

Some have brought up the possibility of using the tool to radicalize others at a massive scale. It’s true that GPT-3 can significantly speed up the spread of information, and any bias in that information is in the hands of whoever runs the algorithm.

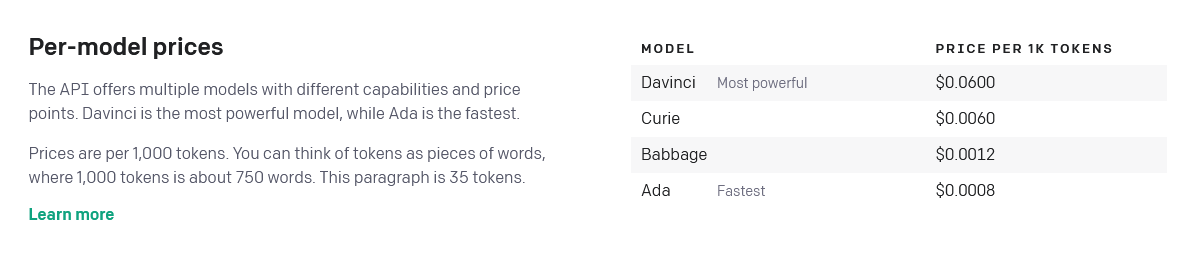

There are also concerns about ownership and licensing. After GPT-3 was revealed publicly, it was announced that Microsoft would have an exclusive license to the underlying model. The tool is still offered as a subscription service, but the source code, training, and other similar matters remain in the hands of the license holders at this point.

And then we have some more unusual scenarios. Some of you might have seen an episode of the TV show “Black Mirror” (which deals with the stranger side of modern technology) where a company was selling personalized chatbots, designed to imitate the behavior of deceased relatives. The company achieved this by training the bots on data like IM logs, emails, and other text conversations.

And that’s not fantasy anymore: it’s completely feasible with enough training data. In fact, it already exists in a slightly different form. AI|Writer is a tool that allows anyone (though access is limited right now) to “talk” to famous historical figures by using this exact method.

Is This the Future of Written Content?

While the technology is restricted right now, it’s only a matter of time before it becomes available to anyone. With that in mind, are we headed for a future where all text content will be generated by AI? Some seem to believe that, but not everyone is convinced. Training remains a big issue on that front.

GPT-3 may be able to produce coherent articles on simple, popular topics that can be easily researched with a few searches, but it still falls short where actual in-depth knowledge is required.

The most realistic outcome is probably somewhere in the middle. Like many other AI tools, this could turn out to be a great assistive tool. Writers and other creatives can use it to relieve themselves of menial, repetitive work, and focus their full energy where it’s needed most. It could help writers improve their flow and structure, and could find a great place in the field of content recommendation as well.

Like It or Not, It’s Here to Stay

Whether any of us agree with where this is going is becoming irrelevant at this point. It’s clear that the technology is going to be used more and more, and the best we can do is to adapt and prepare for what’s coming.

Those who can benefit from what GPT-3 brings to the table should already be looking into opportunities to work with it. And everyone else needs to become a little more skeptical about the things they’re reading—which is already a good attitude in the current climate anyway.
